By Camilo Salas

January 20, 2023

AI has been around since our earliest stories. According to Greek mythology, Talos was a bronze giant who guarded the island of Crete. In the 19th century, Frankenstein began to popularize a subgenre of conscious creatures in science fiction that continues to this day. We know that an evil AI can betray you (2001: A Space Odyssey), it can exist without knowing its artificial condition (Blade Runner), or it can make you fall in love (Her).

We may reach one of these scenarios someday. Meanwhile, in the real world, the most popular approach in AI is deep learning, a subcategory of machine learning designed to analyze data with a logical structure similar to the way humans reason. It works through models, which are what the algorithm uses to recognize patterns in that data.

This technology, which has been around for a long time but has only shown its full capacity in recent years, is behind recent developments such as DALL-E and Midjourney, which generate images from text descriptions. It is also behind the famous ChatGPT, capable of understanding the context of a conversation, creating works of fiction, performing mathematical operations, and even writing code.

These models can predict linguistic responses, and in the same way they can create images and videos by guessing the next frame. Trained on the world’s most extensive library (the Internet), they convincingly determine what comes next, whether a word or a pixel.

It’s estimated that 80 percent of current artificial intelligence research is based on these models. A record $115 billion was invested in these companies in 2021.

But just four years ago, the results delivered by these models were not good.

Runway, a web application that uses deep learning models for editing and creating videos, was built in 2016 by Alejandro Matamala and Cristobal Valenzuela, two Chileans who met while studying Art and Technology at New York University, together with the Greek Anastasis Germanidis, who was also part of the same program.

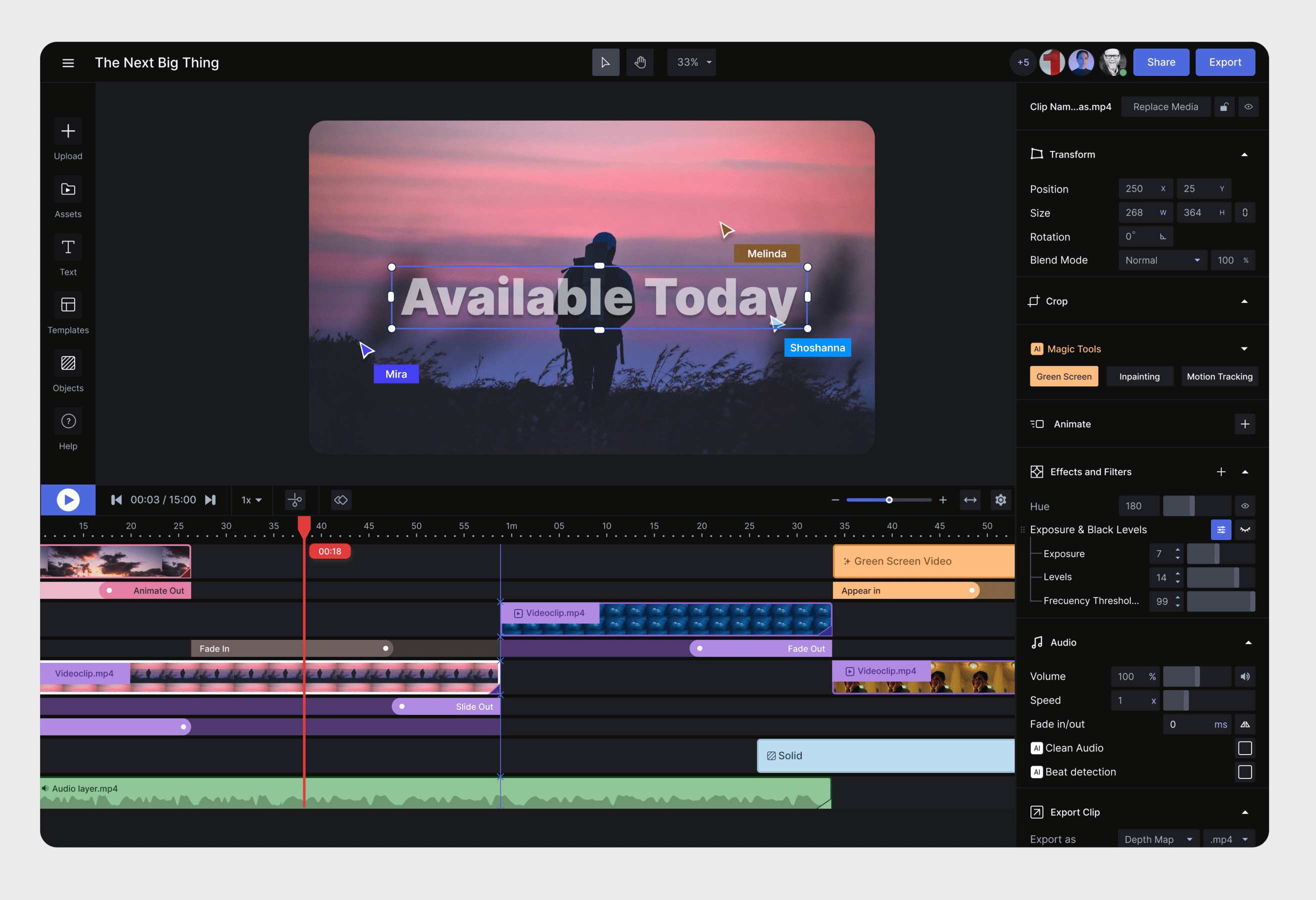

The importance of Runway and similar apps is that their technology will revolutionize many aspects of video editing and creation. “In 2018, no one believed us,” says Matamala while smiling like someone who knows a secret.

“Everyone doubted that computers were going to be able to be creative or generate visual ideas. To be fair, in 2018, the models’ quality wasn’t the best, so it was hard to convince anyone. But the potential was there. And now, six months ago, the game changed entirely because we see very good results. And many people don’t see it as something that will change but as something that is already part of their tools.”

Runway’s video editor is used by productions such as The Late Show With Stephen Colbert.

Among Runway’s clients are several advertising agencies that use image generation to create sketches, generate ideas, and deliver final productions. There are also television programs, such as The Late Show With Stephen Colbert, that use Runway on a daily basis to produce sketches. In an interview, the team behind the show’s special effects details how processes that used to take hours now take only minutes: cutting out a character and placing it on another background (work that used to be done by hand, frame by frame), color correction, motion tracking, image generation, and removing an object entirely from a video.

Runway has also been used in films such as the award-winning “Everything Everywhere All at Once,” which used the green screen tool in several scenes.

“They did several different things in the VFX area,” says Alejandro. “They are professionals, but they don’t have a Hollywood budget. And that’s what we like the most. The next Netflix show is from any of us,” he adds, referring to creators like him. (By the way, Runway is geared toward both companies and the general public.)

Halfway through the unicorn

Last December, Runway announced the closing of a $50 million investment round that valued the company at $500 million. As important as the amount was the fund that led the financing: Felicis, a Californian VC firm behind several companies that became unicorns after its backing.

“We’re halfway through the unicorn,” Alejandro says. “But we are raising capital today because we want to grow. We have a research team that is training and generating models, generating research. We also work on infrastructure, like deploying the research that can lead to the product. And then we have the last layer, which is what the user sees.”

Alejandro Matamala, co-founder of Runway. (Photo: Camilo Salas)

And while allocating resources to research is common among companies in the industry, Runway’s open-source technology sets it apart. It is the main company that originated Stable Diffusion, an open-source image generation model created by one of Runway’s researchers in collaboration with the Computer Vision and Learning team at LMU, the Ludwig Maximilian University of Munich. Many other companies now use it to support their own products.

“We are creating new models and doing things that benefit our product but could eventually benefit others,” says Matamala.

Not bad for a group of founders who met while studying and who, despite coming from different backgrounds (Matamala, design and editorial publishing; Germanidis, computer science and art; Valenzuela, commercial engineering), transformed an initial thesis idea from 2018, which was to simplify existing artificial intelligence tools so they would work on the web, into a company.

Even then, and with only three models, a post on Twitter generated interest from users, which earned them a research residency at NYU dedicated to the potential uses of AI in the creative world. Soon after, they created a prototype. Today Runway has offices in Manhattan and 40 employees.

But finding talent and growing the company was challenging at first. Matamala believes the company could hardly have been born in his native Chile, especially when it came to finding investors. “It’s more complicated to convince people in Latin America, which takes a little longer. And also because here (in New York), there’s a concentration of talent that is difficult to find in LatAm, in different areas such as research, engineering, and design. But we are a well-distributed company. We have people in Chile, we have people in Canada, New Zealand, and part of Europe, but being here brings us a benefit.”

He adds that in Latin America, and especially in Chile, a market he knows, there are cases of successful startups such as Fintual and Cornershop, but he also believes that the syndrome of being a copy of ideas that already exist has yet to be overcome. Many startups are Latin American versions of companies that already exist in the U.S.

He has also encountered difficulty reaching Latin American talent. “We were looking for engineers and designers, but there is no clear way to reach them in Argentina and Chile. Here it’s easier. There are a lot of small platforms for designers or recruiters that are specialized.”

But uncertainties do not only occur with hiring.

As with any new technology, there is debate about the ethical and artistic implications of images and videos generated by artificial intelligence. In a recent op-ed in the Los Angeles Times, artist Molly Crabapple pointed out that these models are faster and cheaper than humans and that their images are so good that they have already been used on book covers and in editorial illustrations, jobs that a few months ago would have gone to a graphic designer or illustrator.

“When Photoshop came along, they were also saying, ‘we’re not going to believe what’s real anymore; this isn’t art.’ Twenty years later, we saw that an economy was created based on this. There are people today who everyone would consider digital artists, and their working tool is Photoshop. Now, [AI] is a paradigm shift where you can create many new things. I see it more as a benefit to the creators. It’s a tool,” Alejandro assures.

As I take the elevator down to the streets of Chinatown after talking with Alejandro, I keep thinking that these new tools and debates are as old as the professions they come to revolutionize. Artists, illustrators, writers, the academic community, and now video editors.

The critical thing to remember is that these technologies are there simply to help us capture our creativity and tell stories.

Stories like this one, written by a human, that may soon be co-written with another intelligent entity.

Main image: Runway founders Anastasis Germanidis, Alejandro Matamala and Cristóbal Valenzuela (Photo: Runway).
